EMPATHETIC AI ™

Advancing the state-of-the-art of Empathetic Machines and Conversational AI

Why do we need Empathetic Conversational AI?

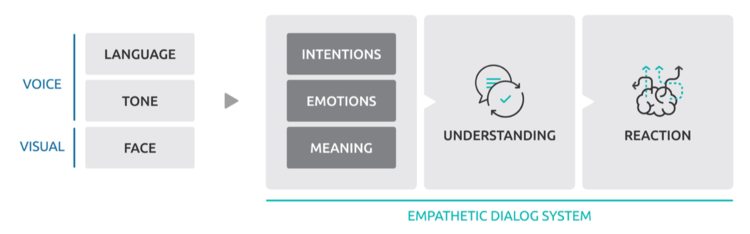

We believe the world will be a better place if machines can learn to understand us better. To make this possible, we need to endow Conversational AI with empathy: the ability to extract emotional cues from tone of voice, speech, text, and facial expressions, and to use those cues to guide system responses. This empathy module can be part of a pipelined, frame-based dialog system, or it can be learned implicitly, end to end, in a neural conversational AI framework.
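To make the pipelined option concrete, here is a minimal sketch of how an empathy module could sit inside a frame-based dialog system. It is illustrative only and is not the EMOS implementation: the keyword lexicons, the `Frame` class, and every function name are hypothetical, and a real system would replace the keyword lookup with trained speech, text, and vision models.

```python
from dataclasses import dataclass

# Hypothetical keyword lexicons standing in for a trained emotion classifier.
NEGATIVE_CUES = {"frustrated", "angry", "upset", "annoyed", "tired", "stressed"}
POSITIVE_CUES = {"great", "thanks", "happy", "love", "awesome"}


@dataclass
class Frame:
    """Dialog frame: the slots the system fills for each user turn."""
    intent: str
    emotion: str = "neutral"


def detect_intent(text: str) -> str:
    """Toy intent detector (assumption: only one known intent)."""
    return "book_flight" if "flight" in text.lower() else "unknown"


def detect_emotion(text: str) -> str:
    """Toy empathy module: map emotional cues in text to a coarse label."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    if words & NEGATIVE_CUES:
        return "negative"
    if words & POSITIVE_CUES:
        return "positive"
    return "neutral"


def respond(frame: Frame) -> str:
    """Response generator: the emotion slot guides the wording of the reply."""
    base = {
        "book_flight": "I can help you book a flight.",
        "unknown": "Could you tell me more about what you need?",
    }[frame.intent]
    if frame.emotion == "negative":
        return "I'm sorry this has been difficult. " + base
    if frame.emotion == "positive":
        return "Glad to hear it! " + base
    return base


def pipeline(text: str) -> str:
    """Run the stages in order: understanding, empathy, then response."""
    frame = Frame(intent=detect_intent(text), emotion=detect_emotion(text))
    return respond(frame)


if __name__ == "__main__":
    print(pipeline("I'm so frustrated, I need a flight today."))
```

In this sketch the detected emotion is just another slot in the frame, so the response stage can adapt its wording without changing how the intent is handled. An end-to-end neural system would instead learn this behavior from data, with no explicit emotion slot.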

Empathetic Conversational AI is enabling a world of applications that were not possible before

Reduce danger on the road

Detect stress levels from drivers’ facial expressions and encourage them to take a break when stressed, tired, or sleepy

Find the right movie for everyone

Identify people in the room and analyze their moods through facial detection to suggest great movies to watch together

Communicate with customers better

Detect personality, emotions, and openness of customers to guide customer service and direct sales employees, increasing customer satisfaction and sales

Sense indirect intentions

For example, empathetic AI can infer that a user asking for information about the common cold, diabetes, or another medical condition may be feeling unwell, and can proactively recommend a nearby doctor

Help with stress and depression

Understand people’s emotional states and guide them through stress-relieving exercises, or proactively suggest therapeutic music to help them work through difficult feelings

WHY EMOS?

The most comprehensive emotion recognition bundle

Our technology leads the field with the most comprehensive capability to understand tone of speech, language, visual cues, stress levels, and personality factors. We constantly improve our system by training our algorithms on new data every day.

The first solution to enable empathetic responses

We endow the machine with the ability to understand humans better through empathy. This allows you to automatically adapt your product's functionality to the user’s current mood and, as a result, increase engagement with your products.

A multilingual platform

Built by a multinational team of AI researchers, EMOS is founded on the belief that multilingual capability is crucial in our increasingly diverse and culturally complex world. Our language-agnostic technology currently supports English and Chinese and will soon extend to other languages.